}
```

Now let's deploy these resources:

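```bash
tofu init   # download the AWS provider and set up the working directory
tofu plan   # preview the bucket and table that will be created
tofu apply  # create them (enter yes when prompted)
```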

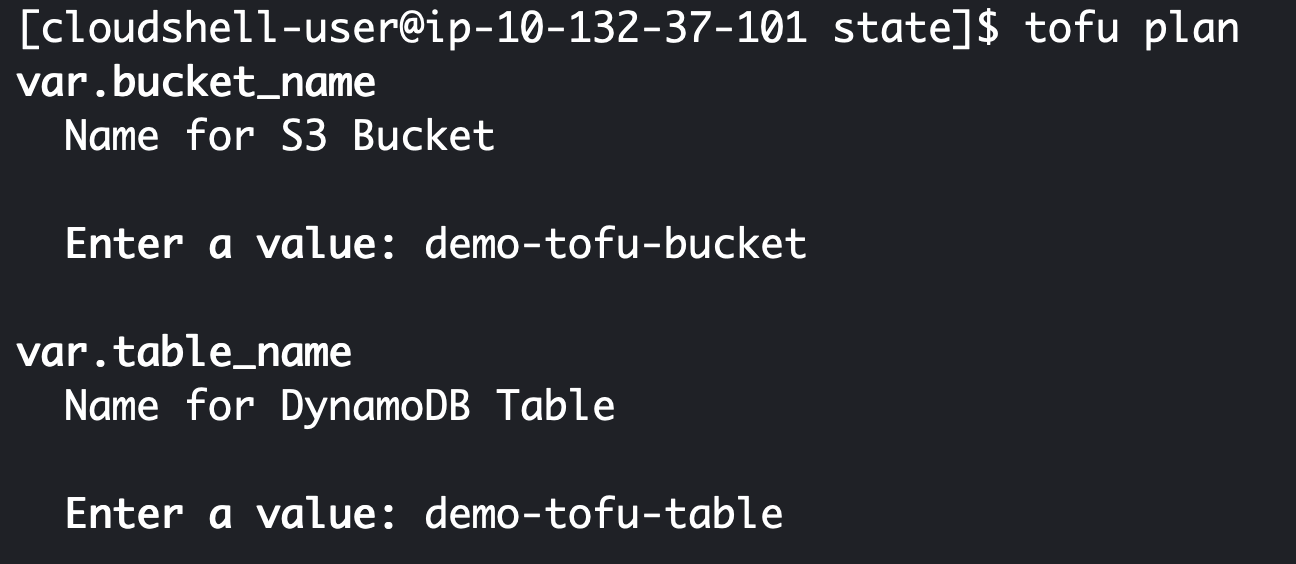

At this point you'll be prompted on the command line to enter a bucket name and a table name. As you can see, I called mine **demo-tofu-bucket** and **demo-tofu-table**. You'll need to pick different values (S3 bucket names are globally unique across all AWS accounts) and remember them for the next part of this lab.
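
If you'd rather not retype the names at every prompt, tofu automatically loads variable values from a file named `terraform.tfvars` in the working directory. Below is a minimal sketch. The table variable is `table_name`, but `bucket_name` is a hypothetical name, so match it to whatever your variables file actually declares and swap in your own values.

```terraform
# terraform.tfvars: picked up automatically by tofu plan/apply in this directory.
# "bucket_name" is a hypothetical variable name; use the one your variables file declares.
bucket_name = "my-unique-tofu-state-bucket"
table_name  = "my-tofu-lock-table"
```

With this file in place, `tofu plan` and `tofu apply` use these values instead of prompting for them.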

After running `tofu apply` and entering **yes** at the confirmation prompt, you should see that two resources have been created successfully!

### Configuring remote state for a stack

Now let's use these resources and create our example stack again. We're going to edit the ```versions.tf``` file in our code directory:

```bash
cd ../code
vi versions.tf
```

We'll add the following ```backend``` block inside the existing ```terraform``` block. Remember to change the ```bucket``` and ```dynamodb_table``` values to the names you chose in the previous section.

```terraform
backend "s3" {
  bucket         = "demo-tofu-bucket"                   # your state bucket
  key            = "terraform-tofu-lab/terraform.state" # path of the state object inside the bucket
  region         = "eu-west-1"                          # region the bucket lives in
  acl            = "bucket-owner-full-control"          # canned ACL applied to the state object
  dynamodb_table = "demo-tofu-table"                    # your lock table (partition key LockID)
}
```

The resulting ```versions.tf``` file looks like this:

```terraform
terraform {
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.66.1"
    }
  }

  backend "s3" {
    bucket         = "demo-tofu-bucket"
    key            = "terraform-tofu-lab/terraform.state"
    region         = "eu-west-1"
    acl            = "bucket-owner-full-control"
    dynamodb_table = "demo-tofu-table"
  }
}
```
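
Hardcoding the names works, but it means editing ```versions.tf``` for every new bucket or table. As an optional alternative, tofu supports partial backend configuration: leave some arguments out of the ```backend "s3"``` block and supply them at init time from a separate file. A sketch, assuming a file we've chosen to call `backend.hcl` (the filename is our own choice, not something the lab requires):

```terraform
# backend.hcl: merged into the backend "s3" block when you run
#   tofu init -backend-config=backend.hcl
# Pairs with a backend "s3" block in versions.tf that omits these two arguments.
bucket         = "demo-tofu-bucket"
dynamodb_table = "demo-tofu-table"
```

The block in ```versions.tf``` then keeps only `key`, `region` and `acl`, so it no longer contains names specific to your account.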

Now let's go ahead and use it. We start with:

```bash
tofu init
```

|

||||

|

||||

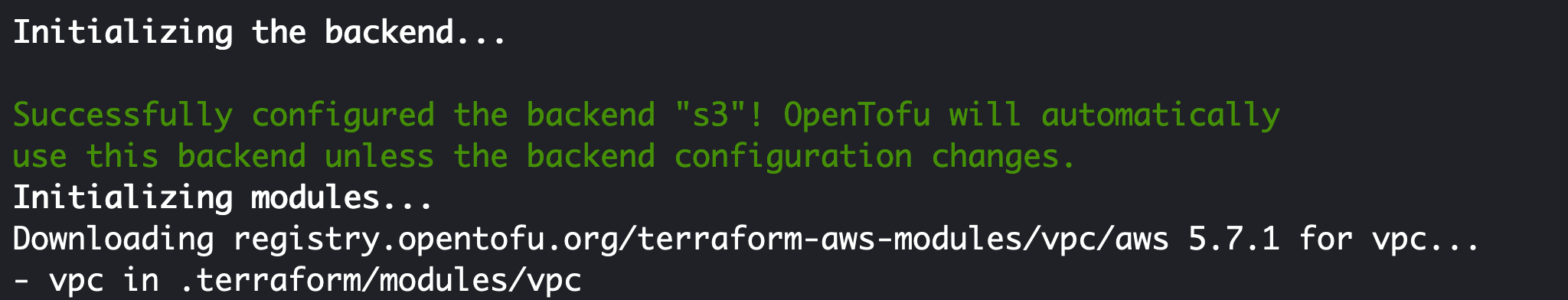

You should see that terraform/tofu automatically uses S3 as the new state backend as comfirmed here:

|

||||

|

||||

|

||||

|

||||

Now you are clear to run **plan** and **apply** and enter **yes** at the prompt.

```bash
tofu plan
tofu apply
```

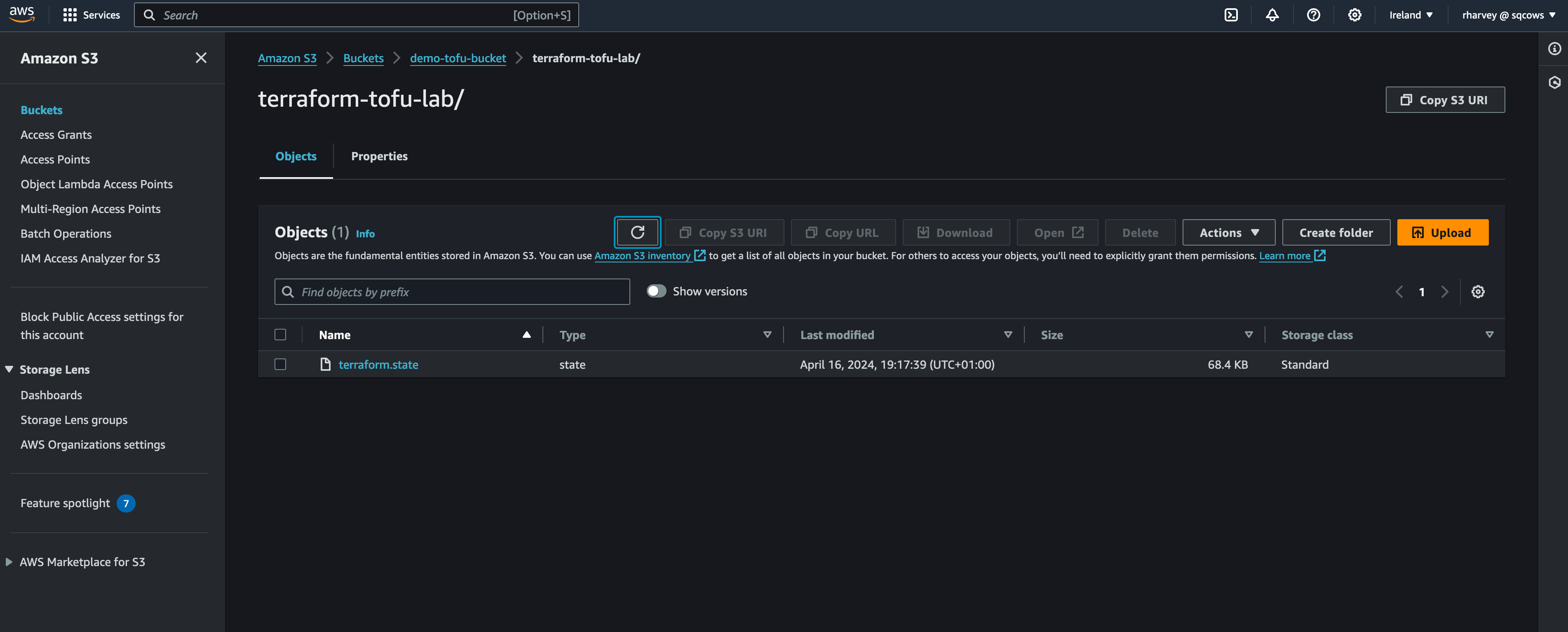

Now you'll notice there's no longer a local ```terraform.tfstate``` file clogging up your disk space on CloudShell. If you open the AWS console in your browser, go to the S3 service and then open the bucket you created, you should see the state stored there as ```terraform.state``` under the ```terraform-tofu-lab/``` prefix, matching the ```key``` in the backend block.
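
If you'd rather stay in CloudShell, the AWS CLI (preinstalled there) can confirm the same thing. A sketch, assuming the bucket name and key from the backend block above; substitute your own bucket name:

```bash
# List objects under the state key's prefix; replace the bucket name with yours.
aws s3 ls s3://demo-tofu-bucket/terraform-tofu-lab/
```

The listing should include `terraform.state` along with its size and last-modified time.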

You won't see much in DynamoDB unless you're in the middle of an **apply**. That's when an item with a ```LockID``` value is written: it locks the state while you update or deploy the stack, so nobody else can overwrite the file in S3 at the same time.

That's it, you're done! You now have remote state configured on AWS using S3 and DynamoDB. This means more than one person can operate a stack deployed by terraform/tofu, which is essential for teams. If you want to look into other ways of storing state, check out the documentation here: [https://opentofu.org/docs/language/state/remote-state-data/](https://opentofu.org/docs/language/state/remote-state-data/)

### Clean up

Let's clean up your resources now:

```bash
tofu destroy
```

Enter **yes** at the prompt. We'll leave the S3 state bucket and DynamoDB table in place for now, as we'll use them again in lab 5.

### Recap

What we've learnt in this lab:

- Why remote state is important
- How to create an S3 bucket and DynamoDB table for storing state
- How to use those resources to store the state of another stack
